February 25, 2023

Microsoft wants ChatGPT to control robots next

As part of its ongoing collaboration with OpenAI, the maker of ChatGPT, Microsoft now plans to use the chatbot to control robots.

“Our goal with this research is to see if ChatGPT can think beyond text and reason about the physical world to help with robotics tasks,” Microsoft said this week in a blog post. “We want to help people interact more easily with robots, without having to learn complex programming languages or details about robotic systems.”

Robots are everywhere, from the robotic arms in factories to the sad little robot vacuum that cleans my uncle’s floor. Today, if you want a robot to do something new, you need an engineer with advanced technical knowledge to write the code and test it repeatedly.

But imagine a world where you could talk to robots directly and give them commands in plain English. What if robots also understood the basic physics of the world around them? That would be good, right? Microsoft thinks so, and it’s probably right.

Microsoft has published a paper laying out a new set of design principles for using a large language model such as ChatGPT to give robots instructions. The company’s framework starts by defining a library of high-level functions the robot can perform. You then write a prompt that ChatGPT translates into code for the robot, and run that code in a simulation to check that it follows your instructions. You refine the result until the robot gets it right, then deploy the finished code on your robot friend.

If that sounds simple, it’s because Microsoft is hiding a phenomenally complex technical problem behind a plain-language interface. The company has posted some nice videos as a proof of concept.
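Here is a minimal Python sketch of the define, prompt, simulate and refine loop. It does not call ChatGPT. The model's reply is a hard-coded string, and several pieces are hypothetical stand-ins, not Microsoft's code or any real robot API:

- the simulated drone;
- its functions `fly_to` and `get_position`;
- the helpers `build_prompt` and `run_in_simulation`.

It also assumes +x is forward and +z is up.

```python
# Hypothetical drone API for illustration only; these are not Microsoft's or AirSim's functions.
class SimulatedDrone:
    def __init__(self):
        self.position = (0.0, 0.0, 0.0)
        self.history = []  # every position the drone was sent to, for review

    def fly_to(self, x, y, z):
        """Jump straight to (x, y, z); a real drone would take time to get there."""
        self.position = (x, y, z)
        self.history.append(self.position)

    def get_position(self):
        return self.position

# Step 1: describe the high-level functions the model is allowed to use.
FUNCTION_LIBRARY = {
    "fly_to(x, y, z)": "fly the drone to coordinates (x, y, z), in meters",
    "get_position()": "return the drone's current (x, y, z) position",
}

def build_prompt(task):
    """Step 2: combine the function library with the user's plain-English request."""
    lines = ["You can only use these functions:"]
    for signature, description in FUNCTION_LIBRARY.items():
        lines.append(f"- {signature}: {description}")
    lines.append(f"Write Python code that does the following: {task}")
    return "\n".join(lines)

def run_in_simulation(generated_code):
    """Step 3: run model-written code against the simulator, never the real robot.

    exec() with a restricted namespace is NOT a security sandbox; it only keeps
    this sketch short.
    """
    drone = SimulatedDrone()
    allowed = {"fly_to": drone.fly_to, "get_position": drone.get_position}
    exec(generated_code, allowed)
    return drone

prompt = build_prompt("fly up 2 meters, then 3 meters forward")
# Stand-in for ChatGPT's reply; in practice this string would come back from the model.
reply = "x, y, z = get_position()\nfly_to(x, y, z + 2)\nfly_to(x + 3, y, z + 2)"
drone = run_in_simulation(reply)
print(drone.history)  # Step 4: review, then refine the prompt or deploy
```

If the simulated history looks wrong, you would revise the prompt or the function descriptions and try again. Only once it looks right would the same code go to the physical robot.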

Then again, Microsoft is already using ChatGPT to fly drones, and flying Terminators didn’t show up until Terminator 3: Rise of the Machines, so Microsoft’s engineers may be ahead of schedule.

Admittedly, this is all a lot sexier than another update to Office 365.

What exactly can ChatGPT do?

Let’s look at a few examples from Microsoft’s blog post, described in the company’s own words. You can find more case studies in the code repository.

Zero-shot task planning

We gave ChatGPT access to functions that control a real drone, and it proved to be an extremely intuitive language-based interface between a non-technical user and the robot. ChatGPT asked clarifying questions when the user’s instructions were ambiguous, and it wrote complex code structures for the drone, such as a zigzag pattern to visually inspect shelves. It even figured out how to take a selfie!
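The post doesn't show the code ChatGPT wrote for the shelf inspection. As a hypothetical illustration only, a zigzag can be read as a back-and-forth, lawnmower-style sweep: fly along one row of the shelf, step up, then fly back along the next row. The function name and its parameters are assumptions. So is the coordinate convention: x runs along the shelf, y is a fixed standoff distance and z is height, all in meters.

```python
# Hypothetical helper: one possible reading of a "zigzag" shelf-inspection path.
def zigzag_waypoints(shelf_width, shelf_height, row_spacing, standoff):
    """Return (x, y, z) waypoints that sweep a shelf face row by row.

    x runs along the shelf, y is the fixed distance (standoff) from the shelf,
    and z is height above the floor. All values are in meters.
    """
    rows = int(shelf_height // row_spacing) + 1
    waypoints = []
    for row in range(rows):
        z = row * row_spacing
        # Alternate direction on each row so consecutive rows form the zigzag.
        xs = (0.0, shelf_width) if row % 2 == 0 else (shelf_width, 0.0)
        for x in xs:
            waypoints.append((x, standoff, z))
    return waypoints

path = zigzag_waypoints(shelf_width=4.0, shelf_height=2.0, row_spacing=1.0, standoff=1.5)
for waypoint in path:
    print(waypoint)  # a real program would call a (hypothetical) fly_to(*waypoint) here
```

Generating waypoints separately from flying them keeps the path easy to check in simulation before any motor commands are sent.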

We also used ChatGPT in a simulated industrial inspection scenario using the Microsoft AirSim simulator. The model was able to effectively parse the user’s high-level intent and geometric cues to accurately control the drone.

User on the loop: when a complex task requires a conversation

We then used ChatGPT in a manipulation scenario with a robotic arm. We used conversational feedback to teach the model how the originally provided APIs could be composed into more complex high-level functions that ChatGPT coded by itself. Using a curriculum-based strategy, the model was able to chain these learned skills together logically to perform operations such as stacking blocks.
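Below is a hypothetical sketch of that composition idea; it is not the code from the experiment. A mock arm exposes only three low-level primitives with invented names: `move_to`, `grab` and `release`. The higher-level skills `pick_up`, `place_on` and `stack` are built on top of them, each reusing the one before. The 5 cm block height is an assumption.

```python
BLOCK_HEIGHT = 0.05  # assumed block height, in meters

class MockArm:
    """Hypothetical stand-in for a robot arm that exposes only low-level primitives.

    The primitive names are invented for illustration, not the experiment's API.
    """

    def __init__(self, block_positions):
        self.blocks = dict(block_positions)  # block name -> (x, y, z) of its base
        self.gripper = (0.0, 0.0, 0.0)
        self.held = None

    def move_to(self, x, y, z):
        self.gripper = (x, y, z)
        if self.held is not None:
            self.blocks[self.held] = (x, y, z)  # a held block travels with the gripper

    def grab(self):
        for name, position in self.blocks.items():
            if position == self.gripper:
                self.held = name
                return
        raise RuntimeError(f"no block at {self.gripper}")

    def release(self):
        self.held = None

# Higher-level skills composed from the primitives: the kind of functions the
# post describes ChatGPT writing for itself.
def pick_up(arm, block):
    arm.move_to(*arm.blocks[block])
    arm.grab()

def place_on(arm, block, target):
    tx, ty, tz = arm.blocks[target]
    pick_up(arm, block)
    arm.move_to(tx, ty, tz + BLOCK_HEIGHT)
    arm.release()

def stack(arm, blocks):
    """Stack blocks bottom to top in the order given."""
    for lower, upper in zip(blocks, blocks[1:]):
        place_on(arm, upper, lower)

arm = MockArm({"red": (0.0, 0.0, 0.0), "green": (0.2, 0.0, 0.0), "blue": (0.4, 0.0, 0.0)})
stack(arm, ["red", "green", "blue"])
print(arm.blocks)
```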

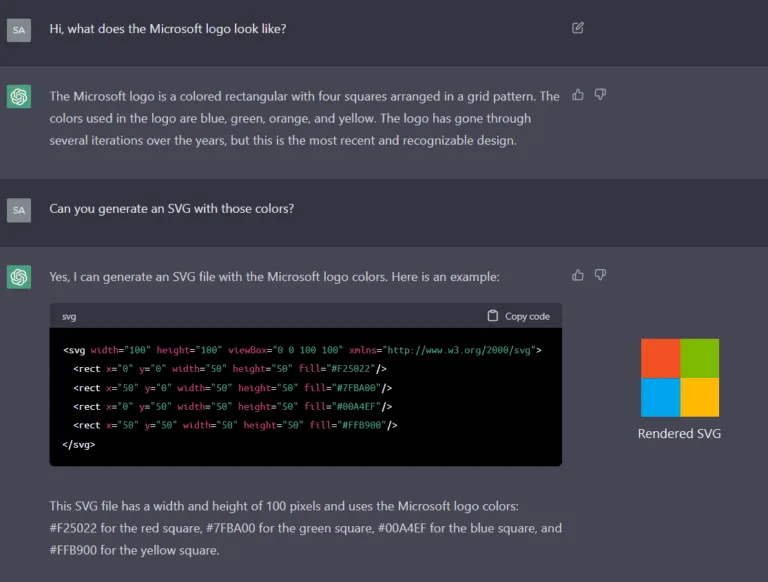

Moreover, the model showed a fascinating example of bridging the textual and physical domains when tasked with building the Microsoft logo out of wooden blocks. Not only was it able to recall the logo from its internal knowledge base, it was also able to “draw” the logo (as SVG code) and then use the skills learned above to figure out which existing robot actions could compose its physical form.
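The SVG-to-blocks step can be sketched as follows, under loose assumptions:

- the SVG is a simplified four-square version of the logo, using plain color names;
- every square maps to one block;
- each block is 5 cm across.

`svg_to_block_targets` is a hypothetical helper that only computes where each block should go. Actually moving the blocks would reuse skills like the ones learned above.

```python
import xml.etree.ElementTree as ET

# Simplified stand-in for an SVG the model might produce: four equal squares in
# the 2x2 layout of the Microsoft logo, with plain color names for brevity.
LOGO_SVG = """<svg xmlns="http://www.w3.org/2000/svg" width="2" height="2">
  <rect x="0" y="0" width="1" height="1" fill="red"/>
  <rect x="1" y="0" width="1" height="1" fill="green"/>
  <rect x="0" y="1" width="1" height="1" fill="blue"/>
  <rect x="1" y="1" width="1" height="1" fill="yellow"/>
</svg>"""

BLOCK_SIZE = 0.05  # assumed edge length of one wooden block, in meters

# Hypothetical helper: turns each <rect> into a target position on the table.
def svg_to_block_targets(svg_text, block_size=BLOCK_SIZE):
    """Map each <rect> to (color, x, y): where a block of that color should go."""
    root = ET.fromstring(svg_text)
    targets = []
    for element in root.iter():
        # Tags carry the SVG namespace, e.g. "{http://www.w3.org/2000/svg}rect".
        if not element.tag.endswith("rect"):
            continue
        col = int(float(element.get("x")) / float(element.get("width")))
        row = int(float(element.get("y")) / float(element.get("height")))
        targets.append((element.get("fill"), col * block_size, row * block_size))
    return targets

targets = svg_to_block_targets(LOGO_SVG)
for color, x, y in targets:
    print(f"place {color} block at ({x:.2f}, {y:.2f})")
```

The SVG row index (which counts downward) is used directly as the table's y coordinate. That is itself an assumption about how the table is oriented.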

We then tasked ChatGPT with writing an algorithm that lets a drone reach a target in space without colliding with obstacles. We told the model that the drone has a forward-facing distance sensor, and ChatGPT immediately coded most of the key building blocks of the algorithm. This task required some back-and-forth conversation with the user, and we were impressed by ChatGPT’s ability to make localized code improvements using only language feedback.
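The post doesn't include the algorithm ChatGPT wrote, so the following is our own minimal sketch, not Microsoft's code. It implements a simple reactive heuristic: head toward the target, and if the forward-facing distance sensor reports an obstacle too close, turn left in steps until the way ahead is clear. The world is 2D with circular obstacles and a simulated sensor. The obstacle layout, step size, 1 m safety margin and 15° turn increment are all assumptions.

```python
import math

# Our own illustrative heuristic, not the algorithm ChatGPT produced in the post.
# Obstacles are circles (center_x, center_y, radius) in a 2D world, in meters.
OBSTACLES = [(5.0, 0.0, 1.0)]

def forward_distance(position, heading, obstacles, max_range=10.0, resolution=0.05):
    """Simulated forward-facing distance sensor: march along the heading until a hit."""
    x, y = position
    distance = 0.0
    while distance < max_range:
        px = x + distance * math.cos(heading)
        py = y + distance * math.sin(heading)
        for ox, oy, radius in obstacles:
            if math.hypot(px - ox, py - oy) <= radius:
                return distance
        distance += resolution
    return max_range

def fly_to_target(start, target, obstacles, step=0.2, safe_distance=1.0,
                  turn=math.radians(15), max_steps=500):
    """Head for the target; if the sensor sees an obstacle too close, turn left until clear."""
    position = start
    path = [position]
    for _ in range(max_steps):
        dx, dy = target[0] - position[0], target[1] - position[1]
        if math.hypot(dx, dy) < step:
            path.append(target)  # close enough: finish with one short final move
            return path
        heading = math.atan2(dy, dx)
        for _ in range(int(2 * math.pi / turn)):
            if forward_distance(position, heading, obstacles) > safe_distance:
                break
            heading += turn
        else:
            return path  # every direction is blocked; stop rather than collide
        position = (position[0] + step * math.cos(heading),
                    position[1] + step * math.sin(heading))
        path.append(position)
    return path

path = fly_to_target((0.0, 0.0), (10.0, 0.0), OBSTACLES)
print(len(path), path[-1])
```

A purely reactive rule like this can get stuck in more cluttered scenes (for example, dead ends). That is the kind of weakness the conversational, localized fixes described above would address.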
