April 09, 2023

New ChatGPT functionality integrated into Azure OpenAI Service

Developers can now integrate ChatGPT directly into a wide variety of enterprise and end-user applications, paying for usage on a per-token basis.

Microsoft is making the ChatGPT large language model available in preview as a component for applications built on the company's Azure OpenAI Service, paving the way for developers to integrate the model into a wide variety of enterprise and end-user applications.

Microsoft appears to have had several customers working with the integration already, naming ODP Corporation (the parent company of Office Depot and OfficeMax), Singapore's Smart Nation Digital Government Office and contract management software provider Icertis as reference customers.

Developers using the Azure OpenAI Service can use ChatGPT to add a range of features to applications, such as summarizing call center conversations, automating claims processing and even creating new ads with personalized content.

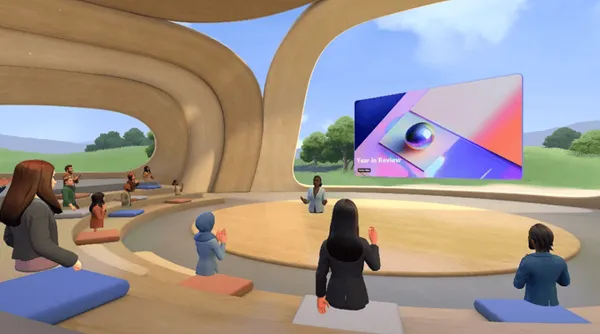

Generative AI such as ChatGPT is also already being used to improve Microsoft's own products. According to the company, the premium version of Teams, for example, can use AI to divide conversations into chapters and generate summaries automatically, while Viva Sales can provide data-driven guidance and suggest email content to help teams reach their customers.

Enterprise use cases for Azure OpenAI ChatGPT

Ritu Jyoti, IDC group vice president for worldwide AI and automation research, said the proposed use cases make a lot of sense, and that she expects much of the initial use of Microsoft's new ChatGPT-powered offering to be internal to enterprises.

"For example, helping HR put together job descriptions, or helping employees with internal knowledge management and discovery; in other words, augmenting employees with internal search," she said.

The service is priced per token: one token covers about four characters of English text, so an average paragraph comes to roughly 100 tokens and a 1,500-word essay to about 2,048. According to Jyoti, one reason GPT-3-based applications gained popularity just before ChatGPT went viral is that the price of OpenAI's foundation models had dropped to about $0.02 per 1,000 tokens.

ChatGPT via Azure costs even less, at $0.002 per 1,000 tokens, which could make the service cheaper than running an in-house large language model, she added.
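To make the arithmetic concrete, here is a rough back-of-the-envelope sketch. It assumes the "about four characters per token" rule of thumb, which is an approximation rather than a real tokenizer, and it uses the two per-1,000-token prices quoted above. The constant and function names are hypothetical, chosen for illustration, and the prices reflect the figures cited in this article rather than current list prices.

```python
# Back-of-the-envelope token and cost estimates (illustrative sketch only).
# Assumptions: ~4 characters per token for English text (a heuristic, not a
# real tokenizer) and the per-1,000-token prices quoted in the article.

CHARS_PER_TOKEN = 4                  # rule of thumb for English text
GPT3_PRICE_PER_1K = 0.02             # USD per 1,000 tokens (GPT-3 figure cited)
CHATGPT_AZURE_PRICE_PER_1K = 0.002   # USD per 1,000 tokens (ChatGPT via Azure)


def estimate_tokens(text: str) -> int:
    """Estimate the token count from character length, rounding up."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division on integers


def estimate_cost(tokens: int, price_per_1k: float) -> float:
    """Return the cost in USD for `tokens` tokens at a per-1,000-token price."""
    return tokens / 1000 * price_per_1k


if __name__ == "__main__":
    essay_tokens = 2048  # roughly a 1,500-word essay, per the figure above
    for label, price in [("GPT-3", GPT3_PRICE_PER_1K),
                         ("ChatGPT via Azure", CHATGPT_AZURE_PRICE_PER_1K)]:
        print(f"{label}: ${estimate_cost(essay_tokens, price):.5f} per essay")
```

At these quoted prices, a 2,048-token essay costs about $0.041 at the GPT-3 rate and about $0.0041 via Azure, and a million tokens works out to $20 versus $2: the tenfold difference Jyoti points to.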

“I think the prices are great,” Jyoti said.

According to Gartner vice president and distinguished analyst Bern Elliot, Microsoft appears to be rolling out the service with an emphasis on responsible AI practices, perhaps having learned from incidents in which a Bing chatbot front end behaved strangely, including a conversation with a New York Times reporter in which the chatbot declared its love and tried to convince him to leave his spouse.

“I think Microsoft, historically, has taken responsible AI very seriously, and to their credit,” he said. “Having a strong track record for ethical use and for delivering enterprise-grade privacy is positive, so I think that’s in their favor.”

That's an important consideration, he said, given the concerns raised by AI use in the enterprise, specifically data protection and the contextualization of datasets. The latter concern generally centers on ensuring that enterprise AI draws its answers from the right base of information, which helps ensure those answers are correct and avoids the "hallucinations" seen in more general-purpose AI.

Source: InfoWorld